Augmented Reality and Computer Vision in Navigation

By Eng (Dr.) Maheshi Dissanayake

Do you use Google Maps to find the best route to your destination? Have you seen the latest self-driving (driverless) vehicles?

The Global Positioning System (GPS), a project initiated by the US Department of Defence in 1973, transformed the navigation industry worldwide. Since then, GPS has evolved from a ‘military technological tool’ into an application of paramount importance to the daily life of civilians. GPS has had a profound impact on modern transportation, making paper road maps almost obsolete. The navigation industry made yet another tremendous leap during the present decade with the introduction of driverless cars. These developments have made modern navigation systems visible across a vast range of technologies. Advances in Artificial Intelligence (AI) have enabled the field of navigation to attain great heights. In particular, Augmented Reality (AR) and Computer Vision (CV) have become crucial technologies for improving how people and vehicles move through a given space.

Computer Vision (CV) is an interdisciplinary field concerned mainly with identifying objects. Modern computer vision systems can derive meaningful information from visual inputs such as images and videos, and process this information to make appropriate recommendations. This contextual information can readily be used to assist navigation. In a real-life situation, for example, a pedestrian about to enter a road crossing can be identified by the vehicle's navigation system, which then warns the driver to reduce speed.

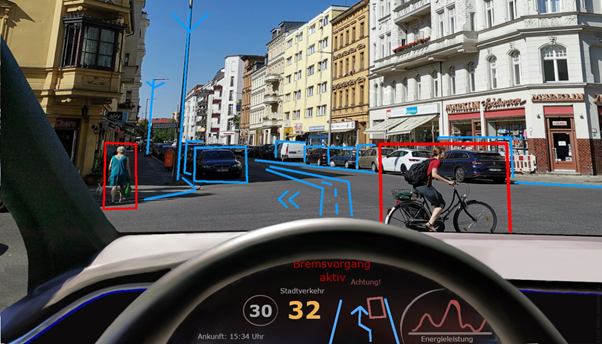

Augmented Reality (AR) is an enhanced version of the real physical world with a digital augmentation overlaid on it. When AR is applied to navigation, the system identifies the physical environment through visual inputs and superimposes virtual components, such as the recommended route, on top of the road view to assist the driver. By showing virtual guides on smartphones, AR technology has created a more natural and simple alternative to physical maps. Furthermore, AR navigation has the potential to function successfully even in enclosed environments where GPS fails to operate.

Combining AR and CV has raised navigation technology to a far higher level. Thanks to exceptional advances in electronics and sensor technology, a live video feed from a vehicle's camera system can be combined with data from radar sensors, lidar (Light Detection and Ranging) sensors, and other Internet of Things (IoT) sensors to provide the information needed to implement an enhanced vehicle navigation system.

The diversity that camera, radar and lidar bring to the visual information space helps the vehicle capture a more complete view of an event, perhaps better than the human eye can. These three sensors see the same object from different perspectives, and hence create redundancy by providing overlapping information to the processing system. This overlapping information helps the navigation system both to make judgements and to validate them, in turn outperforming the human eye. The navigation system can produce a 360-degree view of the surroundings by stitching together inputs from both wide-field-of-view and long-range cameras placed around the vehicle. A major disadvantage of the camera sensor is its limited ability to estimate distance and its high dependency on light intensity. Radar can compensate for this shortcoming of the camera in low-visibility environments. Although radar itself can estimate speed and distance easily, it is unable to distinguish between different types of objects. Lidar, on the other hand, can successfully create a 3D point cloud view of the vehicle's surroundings, with shape and depth information, even in low-light conditions. The rich data provided by these sensors are processed, in a step known as sensor fusion, by a high-performance centralised AI computer to make driving decisions. Because sensor fusion depends on data from more than one sensor, it improves the reliability of the final decision.
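To make the idea of sensor fusion concrete, the sketch below combines distance estimates for the same object from a camera, a radar and a lidar. The article does not specify a fusion method, so this uses inverse-variance weighting as one simple, commonly taught option. The `RangeReading` type, the `fuse_ranges` function and all noise values are assumptions chosen for illustration, not figures from any real vehicle.

```python
from dataclasses import dataclass


@dataclass
class RangeReading:
    """One sensor's distance estimate for the same object (hypothetical type)."""
    sensor: str
    distance_m: float
    std_dev_m: float  # assumed measurement noise, illustrative only


def fuse_ranges(readings):
    """Combine overlapping distance estimates by inverse-variance weighting.

    Sensors with lower assumed noise get a larger weight, so a precise
    lidar reading counts for more than a rough camera estimate.
    """
    if not readings:
        raise ValueError("need at least one reading")
    weights = [1.0 / (r.std_dev_m ** 2) for r in readings]
    total_weight = sum(weights)
    fused = sum(w * r.distance_m for w, r in zip(weights, readings)) / total_weight
    # Standard deviation of the fused estimate, assuming independent errors.
    fused_std = (1.0 / total_weight) ** 0.5
    return fused, fused_std


# Illustrative readings for one object ahead of the vehicle.
readings = [
    RangeReading("camera", 21.0, 2.0),  # cameras estimate distance poorly
    RangeReading("radar", 20.0, 0.5),
    RangeReading("lidar", 20.2, 0.2),
]
fused, fused_std = fuse_ranges(readings)
print(f"fused distance: {fused:.2f} m (std dev {fused_std:.2f} m)")
```

Under these assumptions the fused estimate is less uncertain than any single sensor's reading, which is one way to see why overlapping information makes the final decision more reliable.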

Such an enhanced navigation system would provide a route overlaid on a live view of the road, with the pedestrians, other vehicles and objects that the vehicle has to negotiate masked or outlined. It would turn the windshield into a virtual display by circling and outlining relevant detected objects, such as traffic signs, pedestrians, obstructions and other objects lying in the vehicle's path. Equipped with other IoT sensors, the system described above would be able to predict, ahead of time, the action the vehicle is likely to need to take. By considering all the visual and sensory information, the navigation system would be able to predict possible collisions ahead. Eventually, the ability of the vehicle itself to comprehend the oncoming driving scenario would help to improve road safety.
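One simple way to think about predicting a possible collision is the time-to-collision (TTC) measure: the distance to an object divided by the speed at which the gap is closing. The sketch below is a minimal illustration, not the method of any particular system. The function names and the 2.5-second warning threshold are assumptions made for the example.

```python
import math


def time_to_collision(distance_m, closing_speed_mps):
    """Seconds until contact if the closing speed stays constant.

    Returns infinity when the gap is not shrinking.
    """
    if closing_speed_mps <= 0:
        return math.inf
    return distance_m / closing_speed_mps


def should_warn(distance_m, closing_speed_mps, threshold_s=2.5):
    """Flag an object when its time-to-collision is below the threshold.

    The 2.5 s default is an illustrative value, not a standard.
    """
    return time_to_collision(distance_m, closing_speed_mps) < threshold_s


# A pedestrian 20 m ahead while the gap closes at 10 m/s.
print(should_warn(20.0, 10.0))
```

A real system would have to account for changing speeds, steering and sensor uncertainty. The constant-speed assumption here only shows the basic arithmetic.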

Two of the key requirements for the successful implementation of an AR-based CV navigation system are the quality and the quantity of data. In any application of AI, data is the prime source of knowledge, and the accuracy and dependability of predictions generally improve as more data becomes available. With a high volume of data, these navigation systems can capture many different features of the real world, and thus improve their understanding of the surroundings and reach insightful judgements about the situation being negotiated. However, access to user data is restricted amid rising privacy concerns. Hence, one of the key obstacles encountered by any AR- and CV-based application is the scarcity of data. This hinders both the development of these architectures and their adaptability to different environments.

AR and CV find applications in other navigation scenarios as well, and come in handy when navigating through difficult environments. For instance, AR and CV can be employed to find available spaces in a car park, giving their exact location and helping to park the vehicle in a particular vacant space. They can also be used in mobile robots, especially for remote navigation over difficult terrain. The visual information received from a robot's inbuilt cameras can be coupled with computer-generated virtual environments to guide the robot in environments too dangerous for humans, such as bomb disposal sites.

These navigation systems could also be used in conditions where visibility to the naked eye is poor. They can predict routes at night or in low light using information gathered from pictures and from the onboard cameras of other vehicles and devices that have traversed the same path in daylight. Imagine being a pedestrian on a dimly lit path, guided by your mobile phone and its torch, yet with enhanced visual information as you walk.

The same technology can help visually impaired people to navigate using voice commands. In such applications, the navigation system can act as a mediator between the physical environment and the visually impaired person by providing useful information about the environment at each step.

With so many possible applications, has AR and CV for navigation reached its pinnacle? No, there is still plenty of room for both technologies to improve in assisting navigation technology and its users. The biggest technological milestone reached by AR and CV in navigation applications is the driverless car. Yet human safety remains a major concern. Replicating the exact human visual system together with human intelligence is a challenging task. Although the technology is far more advanced than it was a decade ago, there is still room for improvement.

Is a completely safe autonomous vehicle the peak of advancement in Augmented Reality and Computer Vision based navigation? Only the future will tell.

Eng (Dr.) Maheshi Dissanayake

PhD(Surrey, UK), BScEng(Peradeniya, SL)

Chairperson IEEE Sri Lanka Section

Senior Lecturer, Department of Electrical and Electronic Engineering,

Faculty of Engineering, University of Peradeniya, Sri Lanka.