Ancient Intelligence (AI) to Artificial Intelligence (AI): A narrative

By Dr. Damayanthi Herath

The Artificial Intelligence (AI)-based chatbot ChatGPT reached a landmark user base of one million users within five days [1]. An engineer would naturally find many use cases for Generative AI (Gen AI) tools. ChatGPT is one example: a chatbot that uses text-to-text generation, allowing the user to provide a “prompt” and receive a “response”. Through prompts, the user can perform various tasks such as asking questions to retrieve information, summarizing text and improving writing.

ChatGPT emerged as a demonstration of the power of generative models, specifically Large Language Models (LLMs): deep neural network models that can manipulate language and the ways we express it, via text, speech or images. The effective use of such tools in education has been of interest to many, including the University of Peradeniya, Sri Lanka, which now has a policy on the use of generative AI tools in teaching and learning. How did we get here, and how long did it take? This write-up attempts a narrative walk across the past few decades of Artificial Intelligence, with a specific focus on neural networks.

Seeking Intelligence

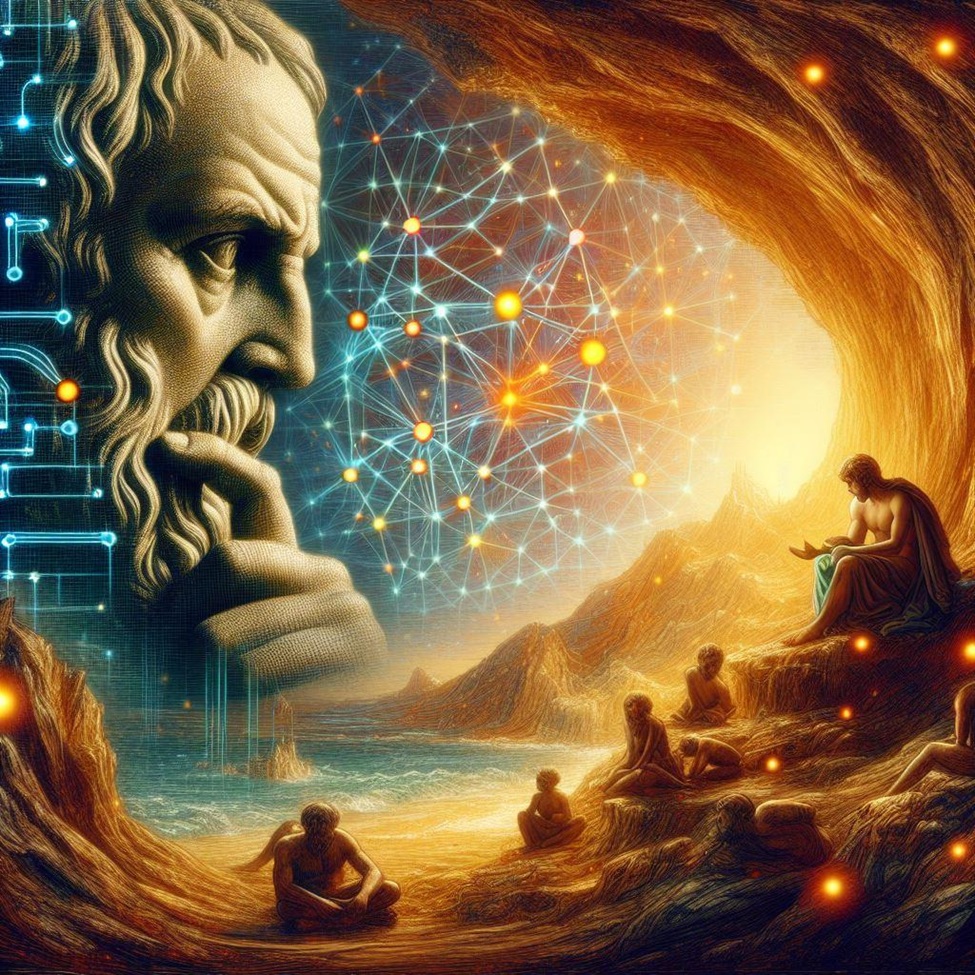

We, the “Sapiens”, are named after a Latin word meaning “the one who knows”; but what does it mean to “know”? Different fields of study define and approach that question in different ways, including through the study of “intelligence”. Interest in intelligence dates back to ancient times. Around 380 BCE, Plato described the famous allegory of the cave, in which prisoners perceive the world only through the shadows that objects cast on a wall. This idea can be related to “representation learning”, a key concept behind Gen AI, which emerged around 2019.

Definition of Artificial Intelligence

As humankind turned its attention to automation during the industrial revolution, a question was asked: “Can we build an intelligent machine?” In 1950, Alan Turing published his famous paper proposing an imitation game to detect machine intelligence [2]. The term “Artificial Intelligence” was coined by John McCarthy in 1955, in the proposal for a workshop held at Dartmouth College, USA, in 1956, referring to “the science and engineering of making intelligent machines”.

Artificial Intelligence, Machine Learning and Deep Learning

One branch of AI is Machine Learning, which acts as a sensor to the digital world and has grown as a field of study alongside the expansion of that world: the world of the Internet of Things and big data. Machine Learning evolved as a branch of AI that aims to automate the extraction of rules from data; these rules can later be used for prediction and for forecasting the behavior of a system. Advances in computing technologies drastically increased the amount of available data, to the point that machine learning is often considered equivalent to AI, although in theory it is only one way to achieve AI.

When we think of intelligence, we think of the brain, as did many who were interested in AI. Attempts were made to mimic intelligence by mimicking the functions of the brain, drawing inspiration from fields that study the brain, such as neuroscience and psychology. The first widely known attempt is the McCulloch-Pitts model (1943), a simplified model of the biological neuron; networks of such units can perform any given logic operation. Next came the “perceptron”, a model neuron that produces its output by passing the weighted sum of its inputs through an activation function (mimicking the firing of a biological neuron); its weights are learnt from data and can then be used for prediction. It soon became evident that a single perceptron fails on certain kinds of data, namely data that are not linearly separable, such as the XOR (exclusive OR) function. A neural network that stacks perceptrons in layers, enabling it to model such complex data, was therefore suggested. The more layers a network had, the more complex the patterns it could capture, which led to neural networks with tens, hundreds and more layers, referred to as Deep Learning. Deep Learning soon became the most prominent branch of machine learning as computing power improved with the advent of Graphics Processing Units (GPUs) for general-purpose computing.
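For readers who would like to see the idea in action, the perceptron and the motivation for stacking layers can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a historical reconstruction: it assumes a step activation, the classic perceptron learning rule, and hand-picked (not learnt) weights for the two-layer network; all function names are hypothetical.

```python
# Minimal perceptron: a weighted sum of the inputs plus a bias, passed through
# a step activation; the weights are learnt with the classic perceptron rule.


def step(z: float) -> int:
    """Step activation: the neuron 'fires' (outputs 1) only when z > 0."""
    return 1 if z > 0 else 0


def predict(weights: list[float], bias: float, x: tuple[int, ...]) -> int:
    return step(sum(w * xi for w, xi in zip(weights, x)) + bias)


def train_perceptron(data, epochs: int = 20, lr: float = 1.0):
    """Nudge the weights towards the target whenever a prediction is wrong."""
    weights, bias = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, target in data:
            error = target - predict(weights, bias, x)
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


def accuracy(weights, bias, data) -> float:
    return sum(predict(weights, bias, x) == t for x, t in data) / len(data)


INPUTS = [(0, 0), (0, 1), (1, 0), (1, 1)]
AND_DATA = [(x, x[0] & x[1]) for x in INPUTS]
XOR_DATA = [(x, x[0] ^ x[1]) for x in INPUTS]

w_and, b_and = train_perceptron(AND_DATA)  # AND is linearly separable
w_xor, b_xor = train_perceptron(XOR_DATA)  # XOR is not


def two_layer_xor(x: tuple[int, int]) -> int:
    """Perceptrons stacked in two layers: XOR = (x1 OR x2) AND NOT (x1 AND x2).
    These weights are hand-picked for illustration, not learnt."""
    h_or = predict([1.0, 1.0], -0.5, x)
    h_and = predict([1.0, 1.0], -1.5, x)
    return predict([1.0, -1.0], -0.5, (h_or, h_and))


print("AND, single perceptron:", accuracy(w_and, b_and, AND_DATA))  # → 1.0
print("XOR, single perceptron:", accuracy(w_xor, b_xor, XOR_DATA))  # below 1.0
print("XOR, two layers:", [two_layer_xor(x) for x in INPUTS])  # → [0, 1, 1, 0]
```

A single perceptron draws one straight line through its input space; since no single line separates the XOR outputs, a second layer is needed to combine two such lines.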

Generative AI with Large Language Models

Until recently, much research and many applications focused on neural networks that learn in a discriminative way, where the decision boundaries that separate the data at hand are learnt (e.g. learning the decision boundary that tells a cat from a dog in a given image, without worrying about the underlying logic). A leap in AI came with a generative way of learning, where the model learns rules that can later be used to generate new data (e.g. learning the characteristics of cats and dogs, which can then be used to generate new images of cats and dogs). Generative learning makes use of the representation learning mentioned above. Generative neural networks coupled with Natural Language Processing (NLP) led to Large Language Models, which are capable of learning the intricacies of one of the most powerful tools of humans, if not the most powerful one: language. Here we are now: coexisting with AI tools that let us automate tasks in which language is used extensively, while looking forward to what more we can achieve by improving AI further.
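The difference between the two ways of learning can be illustrated with a deliberately tiny toy example in Python. The one-dimensional “feature” values for cats and dogs are made up, the Gaussian assumption is ours, and the function names are hypothetical; real generative models such as LLMs learn vastly richer representations, but the contrast is the same in spirit: one approach learns only a boundary, the other learns what the data of each class looks like.

```python
import random
import statistics

# Made-up toy data: one hypothetical numeric feature extracted from each image.
CATS = [2.1, 2.5, 2.8, 3.0, 3.4]
DOGS = [5.2, 5.9, 6.1, 6.6, 7.0]


def learn_boundary(class_a: list[float], class_b: list[float]) -> float:
    """Discriminative: learn only a threshold separating the classes.

    Candidate thresholds are midpoints between neighbouring sorted values; the
    one misclassifying the fewest points (class_a below, class_b above) wins.
    """
    values = sorted(class_a + class_b)
    candidates = [(lo + hi) / 2 for lo, hi in zip(values, values[1:])]

    def errors(t: float) -> int:
        return sum(x >= t for x in class_a) + sum(x < t for x in class_b)

    return min(candidates, key=errors)


def learn_distribution(samples: list[float]) -> tuple[float, float]:
    """Generative: model how one class's data is distributed, assumed here to
    be Gaussian and summarised by its mean and standard deviation."""
    return statistics.mean(samples), statistics.stdev(samples)


threshold = learn_boundary(CATS, DOGS)
cat_model = learn_distribution(CATS)


def classify(x: float) -> str:
    """The learnt boundary can tell a cat from a dog, and nothing more."""
    return "cat" if x < threshold else "dog"


# The class model, in contrast, can generate new cat-like feature values.
rng = random.Random(0)
new_cats = [rng.gauss(*cat_model) for _ in range(3)]

print("threshold:", threshold)
print("3.0 and 6.0 classified as:", classify(3.0), classify(6.0))
print("generated cat features:", [round(x, 2) for x in new_cats])
```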

Postscript: While Gen AI tools are quite handy for summarizing and writing, this write-up was produced with zero Gen AI assistance, with the intention of receiving valuable feedback from readers to further improve the human version of the writer. The image in this article, however, was generated using a Gen AI tool [3], with the intention of receiving feedback on the AI-assisted human version of the writer. The writer would highly appreciate feedback emailed to damayanthiherath@eng.pdn.ac.lk.

- [1] Reuters, https://www.reuters.com/technology/chatgpt-sets-record-fastest-growing-user-base-analyst-note-2023-02-01/ (accessed May 2024).

- [2] A. M. Turing, “Computing Machinery and Intelligence,” Mind, 1950.

- [3] Microsoft Copilot Image Creator (accessed May 2024).

Eng. Dr. Damayanthi Herath

Department of Computer Engineering, University of Peradeniya (UoP) / Data Engineering and Research (DEAR) Group, UoP